The proliferation of small, low-power machine learning (ML) chips, generally referred to as tinyML, has paved the way for embedding more powerful data-analytics processing into battery-powered devices that continuously sense the environment for data: smart home security systems that can discern a glass break from a dog bark, smart earbuds that respond accurately to voice commands, or smart sensors that can detect a change in vibration indicative of imminent machine failure.

While designers have developed a range of new architectures and technologies to deliver tinyML chips, they’ve done it with one goal in mind: deliver powerful, efficient edge processing at a low-enough power to maintain battery life. Since analog is generally more power-efficient than digital at certain computational tasks, one recent approach to reducing power draw is to implement analog circuitry within the chip — a strategy often characterized as analog computing. However, the degree to which analog circuitry is used varies greatly among tinyML chips and ultimately changes the functionality of the chip and where it fits within the greater always-on system architecture. This has spawned confusion in the marketplace, making it difficult for designers to choose the best analog computing solution for a particular application.

What is analog computing and what do engineers need to know about it so they can make sound decisions during product development to differentiate their battery-operated always-on devices?

Analog computing for inferencing in the digital domain

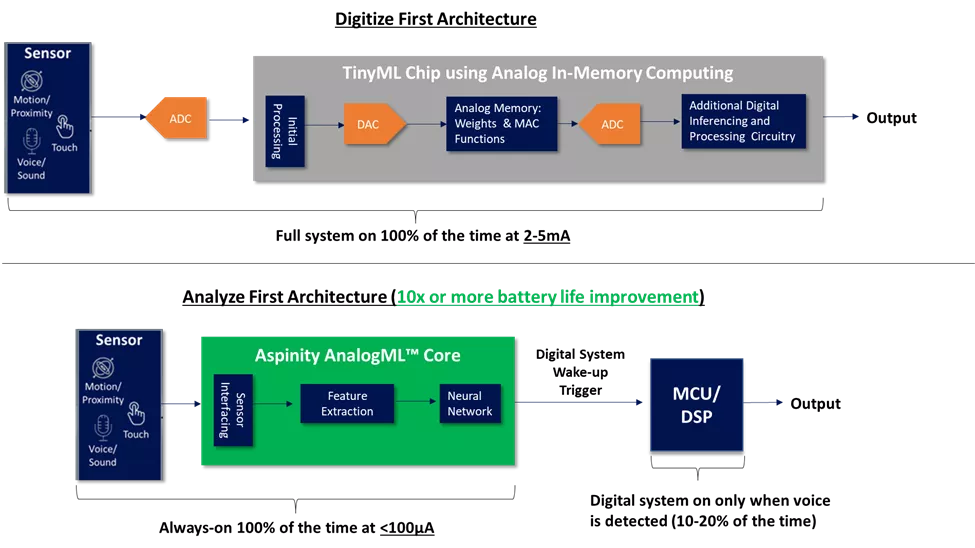

One tinyML chip segment leverages analog circuitry to perform some neural network functions, such as the multiply-accumulate (MAC) operation. While this is analog computing in the broadest sense, it’s more precise to call it “analog in-memory computing,” because the analog computation on these chips occurs only within the memory elements. Because these tinyML chips are still clocked processors that are mostly digital, they require not only digital sensor input but also on-chip data conversion from digital to analog and back again for any computation performed in analog circuitry. The result is a chip-level solution that incrementally reduces system power.

But the solution is also a traditional digitize-first architecture that wastes significant system power by immediately digitizing all data, relevant or not, before any real analysis takes place in the digital domain. If we want to make a meaningful impact on battery life, we must look to a more complete type of analog computing that addresses the efficiency of the whole always-on system and not just the efficiency of one chip.
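
The digital-to-analog-to-digital round trip that analog in-memory computing requires can be illustrated numerically. The Python below is a minimal sketch, assuming ideal uniform 8-bit DAC and ADC models, inputs in [-1, 1], and a noise-free analog dot product; the function names (`quantize`, `digital_mac`, `analog_in_memory_mac`) and bit widths are hypothetical and not taken from any vendor's chip. It models only the accuracy cost of the conversions, not their power cost.

```python
import numpy as np


def quantize(x, bits, full_scale):
    # Model a signed data converter: clip to +/- full_scale, then round to the
    # nearest of 2**(bits - 1) - 1 uniform steps on each side of zero.
    levels = 2 ** (bits - 1) - 1
    step = full_scale / levels
    return np.round(np.clip(x, -full_scale, full_scale) / step) * step


def digital_mac(weights, inputs):
    # Reference multiply-accumulate: the sum of weight * input products.
    return float(np.dot(weights, inputs))


def analog_in_memory_mac(weights, inputs, dac_bits=8, adc_bits=8, noise_std=0.0, rng=None):
    # Hypothetical model of one analog in-memory MAC: digital inputs (assumed to lie
    # in [-1, 1]) pass through a DAC, the memory array accumulates in the analog
    # domain (an ideal dot product plus optional Gaussian noise), and an ADC
    # converts the result back to digital.
    if rng is None:
        rng = np.random.default_rng(0)
    x_analog = quantize(np.asarray(inputs, dtype=float), dac_bits, full_scale=1.0)
    accumulated = np.dot(weights, x_analog) + rng.normal(0.0, noise_std)
    # Size the ADC for the largest possible sum when every |input| <= 1.
    adc_full_scale = float(np.sum(np.abs(weights)))
    return float(quantize(accumulated, adc_bits, adc_full_scale))


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    w = rng.uniform(-1, 1, 64)
    x = rng.uniform(-1, 1, 64)
    print("digital MAC:", digital_mac(w, x))
    print("analog in-memory MAC (8-bit DAC/ADC):", analog_in_memory_mac(w, x))
```

Every input still arrives already digitized, so this sketch changes where one arithmetic step happens, not how much data the system must digitize in the first place.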

Analog computing for inferencing in the analog domain

Today there’s a much more complete implementation of analog computing that goes beyond integrating a small amount of analog circuitry to perform certain chip functions: analogML.

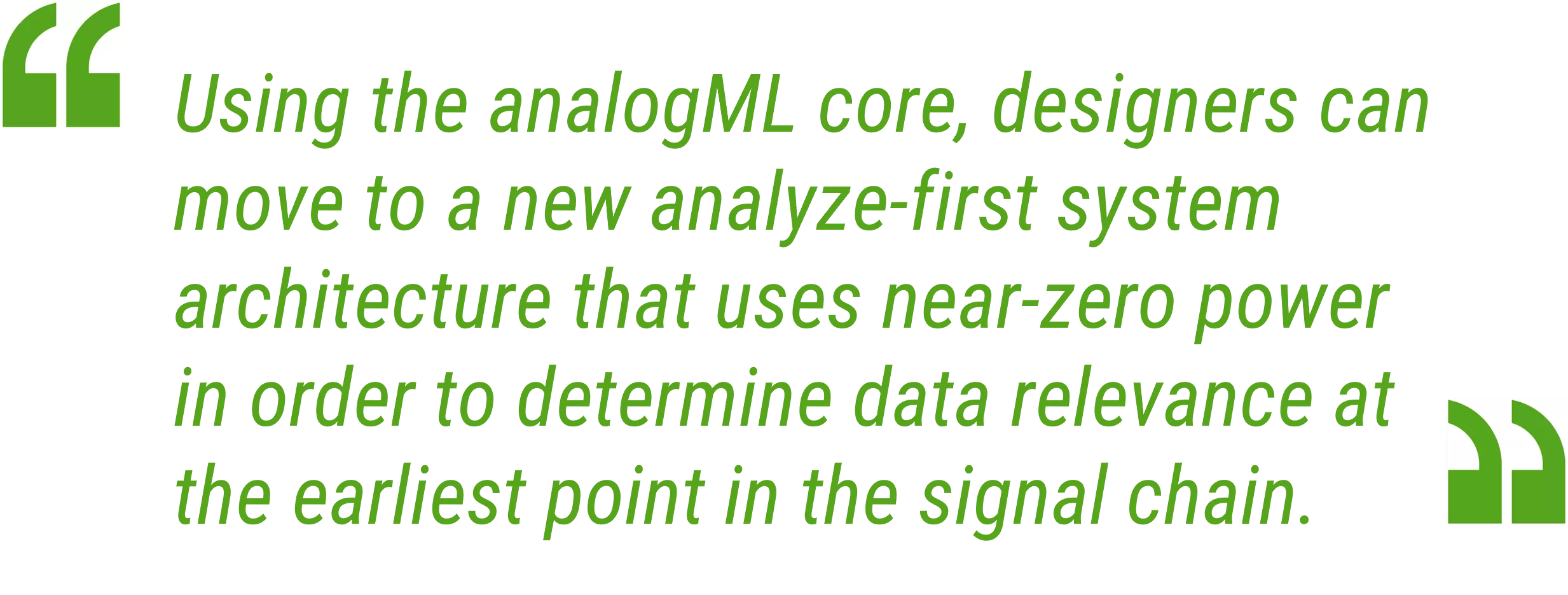

The analogML core is a complete analog processing chip that pulls a higher level of intelligence and inferencing into the analog domain. Using the analogML core, designers can move to a new analyze-first system architecture that uses near-zero power to determine data relevance at the earliest point in the signal chain, while the data are still analog, and ultimately keep downstream higher-power digital processors off unless additional, more detailed analysis is required. This cascaded approach to machine learning eliminates the wasteful digital processing of irrelevant data and delivers a system-level power efficiency that can extend battery life from months to years.
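
The control flow of this cascaded approach can be sketched in software. The Python below is a minimal illustration, assuming a simple frame-energy threshold as a stand-in for the relevance check; the function names, frame length, and threshold are hypothetical, and the sketch cannot model the analog circuitry itself. It shows only the structure: a cheap, always-on stage decides whether an expensive, normally-off stage runs at all.

```python
import numpy as np


def frame_energy(signal, frame_len):
    # Split the signal into non-overlapping frames and return mean power per frame.
    n_frames = len(signal) // frame_len
    frames = np.reshape(signal[: n_frames * frame_len], (n_frames, frame_len))
    return np.mean(frames ** 2, axis=1)


def analyze_first(signal, frame_len, threshold, detailed_classifier):
    # Stage 1 (always on, cheap): flag frames whose energy exceeds a threshold.
    # This only stands in for the relevance check; the analogML core performs it
    # on analog data before any ADC, which a digital simulation cannot capture.
    relevant = frame_energy(signal, frame_len) > threshold
    # Stage 2 (normally off, expensive): invoked only for frames flagged relevant.
    results = {
        int(i): detailed_classifier(signal[i * frame_len:(i + 1) * frame_len])
        for i in np.flatnonzero(relevant)
    }
    # Fraction of frames for which the downstream stage had to wake up.
    wake_fraction = float(np.mean(relevant)) if relevant.size else 0.0
    return results, wake_fraction


if __name__ == "__main__":
    fs = 16000
    rng = np.random.default_rng(1)
    signal = 0.01 * rng.standard_normal(fs)  # 1 s of low-level background noise
    # Add a 100 ms, 300 Hz tone burst standing in for a relevant event.
    signal[8000:9600] += 0.5 * np.sin(2 * np.pi * 300 * np.arange(1600) / fs)
    results, wake = analyze_first(signal, frame_len=160, threshold=1e-3,
                                  detailed_classifier=lambda frame: "event")
    print(f"downstream stage woke for {wake:.0%} of frames: {sorted(results)}")
```

In this toy signal the expensive stage runs on only the ten frames that contain the burst; everything else is discarded by the cheap first stage.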

What’s in the analogML core?

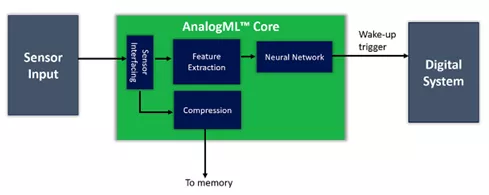

Looking under the hood of the analogML core reveals the difference between analog in-memory computing, where analog computing is used only for the neural network, and the analogML core, which consists of multiple analog processing blocks that are independently powered, software-reconfigurable, and programmable for various analyze-first applications (figure 1).

Figure 1: Software-programmable blocks inside the analogML core

These low-power analog blocks enable a wide range of functions, such as sensor interfacing, analog feature extraction, an analog neural network, and analog data compression to support applications such as voice activity detection, glass break or other acoustic event detection, vibration anomaly detection, or biometric monitoring.

Analog voice activity detection

Typical voice-first systems currently employ a digitize-first architecture that keeps both analog and digital systems on 100% of the time, continuously drawing 2000–5000 µA to capture and analyze all sound data while listening for a keyword. In contrast, the analogML core in a voice-first device enables an analyze-first architecture. In this case, only the analog microphone and analogML core are on 100% of the time, listening for the presence of voice – the only sound that could contain a keyword. This implementation reduces the always-on current draw to <100 µA because the analogML core wakes up the digital processor for keyword analysis only when voice is detected in the analog domain (figure 2).

By keeping the higher-power digital system off for 80%-90% of the time, the analogML core extends battery life by up to 10 times. That means smart earbuds lasting for days instead of hours, or a voice-activated TV remote lasting for years instead of months on a single battery charge.

Figure 2: A digitize-first system architecture that integrates analog circuitry for inferencing in the digital domain draws 2000–5000 µA of always-on system current (top block). By contrast, an analyze-first system architecture that integrates analog circuitry for inferencing in the analog domain draws <100 µA always-on and extends battery life by 10x or more (bottom block).
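
The battery-life gains above follow from simple duty-cycle arithmetic. The Python below is a back-of-the-envelope sketch, assuming an ideal battery (capacity divided by average current, with no self-discharge or conversion losses) and assuming the woken digital chain draws the same 2000 µA as the low end of the digitize-first range; the function names and the 100 mAh capacity are hypothetical.

```python
def average_current_ua(always_on_ua, digital_ua, digital_duty):
    # Mean current: the always-on front end draws current continuously, while the
    # digital back end (ADC/MCU/DSP) adds its current only for the fraction of
    # time it is awake.
    if not 0.0 <= digital_duty <= 1.0:
        raise ValueError("digital_duty must be between 0 and 1")
    return always_on_ua + digital_duty * digital_ua


def battery_life_hours(capacity_mah, avg_current_ua):
    # Ideal battery life: capacity / mean current (1 mA = 1000 uA), ignoring
    # self-discharge, temperature effects, and voltage-conversion losses.
    return capacity_mah / (avg_current_ua / 1000.0)


if __name__ == "__main__":
    capacity_mah = 100.0  # hypothetical battery
    # Digitize-first: the whole chain is on 100% of the time (low end of 2000-5000 uA).
    digitize_first = average_current_ua(0.0, 2000.0, 1.0)
    for duty in (0.20, 0.10, 0.01):
        # Analyze-first: ~100 uA always on, digital chain awake only `duty` of the time.
        analyze_first = average_current_ua(100.0, 2000.0, duty)
        gain = (battery_life_hours(capacity_mah, analyze_first)
                / battery_life_hours(capacity_mah, digitize_first))
        print(f"digital on {duty:.0%}: {analyze_first:.0f} uA average, {gain:.1f}x battery life")
```

Because the always-on floor never switches off, the gain in this model approaches `digital_ua / always_on_ua` (20x for these numbers) as the digital duty cycle approaches zero, which is why rarely triggered applications benefit most.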

AnalogML: a paradigm shift for analog computing

AnalogML goes far beyond using a little analog computing in an otherwise digital processor for a small fraction of the overall ML chip calculations to save power. It’s a complete analog processing solution that determines the importance of the data at the earliest point in the signal chain – while the data are still analog – to minimize both the amount of data that runs through the system and the amount of time that the digital system (analog-to-digital converter/MCU/DSP) is turned on. In some applications, such as glass break detection, where the event might happen once a decade (or never), the analogML core keeps the digital system off more than 99% of the time, extending battery life by years. This opens up new classes of long-lasting remote applications that would be impossible to achieve if all data, relevant or not, were digitized prior to processing.

Here’s the bottom line: Not all tinyML chips that incorporate analog circuitry and fall under the analog computing umbrella are the same. No matter how much analog processing is included in a chip to reduce its power consumption, unless that chip operates completely in the analog domain, on analog data, it’s not doing the one thing that we know saves the most power in a system: processing less data digitally.

About Marcie Weinstein

Marcie Weinstein, Ph.D., brings more than 20 years of technical and strategic marketing expertise in MEMS and semiconductor products and technologies to Aspinity. Previously, she held multiple senior leadership roles in technical and strategic marketing as well as in IP strategy at Akustica, where she later managed the company’s marketing strategy while it was a subsidiary of the Bosch Group. Earlier in her career, she was a senior member of the MEMS group technical staff at the Charles Stark Draper Laboratory in Cambridge, Mass. She received her Ph.D. in Solid State Physics from Lehigh University and her B.A. from Franklin and Marshall College.

Aspinity is a member of MEMS & Sensors Industry Group® (MSIG), a SEMI Technology Community that connects the MEMS and sensors supply network in established and emerging markets to enable members to grow and prosper.